Capitalists use new high-tech tools to pile up their cash.

Unions are beginning to organize online.

What happens, though, when the computers start deciding for themselves what they will do?

NPR recently devoted some space to exploring this issue.

Some in the world of computer nerds call it the Singularity: the leap into the unknown that will happen when artificial intelligence learns how to improve itself, setting off an exponential “intelligence explosion.”

Setting aside the dangers that most progressives worry about, these computer experts say the Singularity is a greater threat to humanity than global warming or nuclear war.

An out-of-control computer, they say, is as likely to end life as we know it as either climate change or nuclear war.

The possibility first occurred to me in the late 1960s in a Brooklyn movie theater as I watched the HAL 9000 computer in “2001: A Space Odyssey” tell the only astronaut it had not yet killed: “I know I made some poor decisions recently but I can give you my complete assurance that my work will be back to normal.”

Now, in 2011, some computer experts believe we are really close to the day when we invent artificial intelligence that will figure out how to give itself more capabilities.

The downside of that incredible day, they say, will be that it could be the last thing we ever invent.

The idea is that humans will invent a computer that will be able to examine its own source code and say, “If I change this or that, I will get smarter.”

When it gets smarter, it gains still newer insights, makes more changes and ends up as an incredibly intelligent thing just exploding with more intelligence. Humans won’t be able to keep up! (Remember “Terminator 2” and “The Matrix”?)

Some of the nerds say the Singularity will be worse than in those movies. Rather than leaving a few people to hide under decayed buildings where they dodge laser beams for the rest of their lives, the computers might wipe out human life altogether, these experts say.

“All the people could end up dead,” says Keefe Rodesheimer, software engineer at the Singularity Institute for Artificial Intelligence.

His institute is no joke. It rakes in big money from contributors like Peter Thiel, co-founder of PayPal.

The donors say they are exercising the forethought necessary to prevent that ultimate future disaster. One of their big concerns is how to invent an “off switch” for a monster like the HAL 9000 or its much tinier future descendants.

At the risk of sounding like an old-time radical, however, I still believe that the big issue we face with the new technology, as with all previous technologies, is who controls it and for what purpose.

I think the money would be better spent harnessing the new technologies to feed the hungry, improve agriculture, halt climate change, disarm warheads, improve schools and health care and, yes, even to adopt stray animals.

If we fail to do these things, we could end up terminating life as we know it long before the new generation of HAL 9000s gets the jump on us.

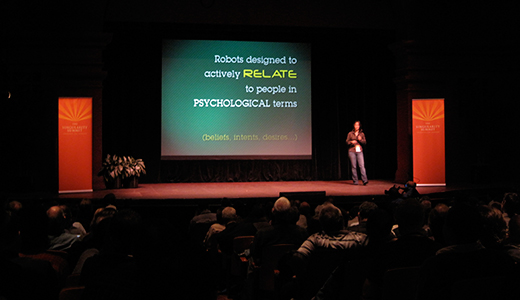

Photo: A presentation at the 2008 Singularity Summit in San Jose, Calif. Alexander van Dijk CC 2.0